How to build a defensive AI security agent with RAG

Introduction

In my previous post, I walked through a POC of building an offensive AI security agent that could analyze malicious JavaScript files and identify potential security vulnerabilities. That agent autonomously found three such vulnerabilities in the vulnerable application server hosted locally. If you haven't read that post yet, I recommend reading it first.

This post takes the previous one as inspiration to actually do something about the attack. As a security engineer inside an organization, I'd ideally want to defend against such attacks in real time. For POC purposes, however, I wanted a scenario that is more realistic, relatable and practical to implement. Therefore, we will assume that the application server maintains an access log file that records all incoming requests.

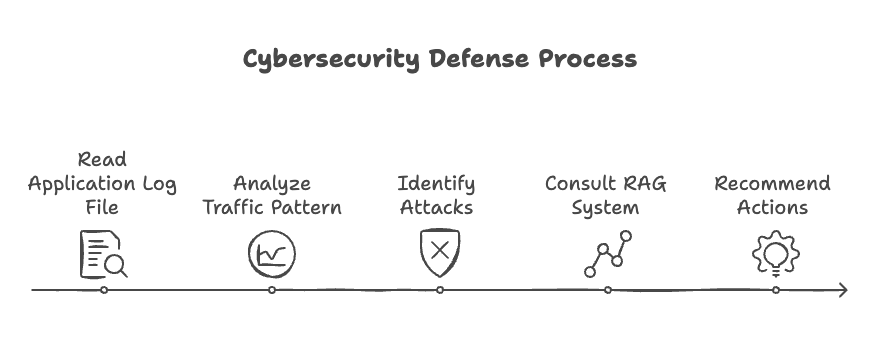

With that in mind, in this post I will walk through a POC of a defensive AI security agent that analyzes the application server's logs and identifies attack patterns. For each type of attack found, it consults an internal knowledge base of security best practices for that vulnerability class, and it finally recommends how to address the vulnerabilities found. Since this agent queries a vector DB for RAG (retrieval-augmented generation), it is also referred to as an Agentic RAG system. It is organization-aware, i.e. it knows the organization's security best practices and can tailor its recommendations accordingly.

So, let's get started!

Modifying the test lab

Since I've open sourced the code (see the Project Cyber Safari section below), I'll link to the files instead of pasting code snippets here.

The only change to the test lab from the previous post is that I added logging: logs are now written to disk in the app_logs/app.log file. The code looks like this now. The purpose was to give the defensive agent something to analyze.
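The exact format of app_logs/app.log isn't reproduced in this post, so the sketch below assumes a hypothetical one-request-per-line format (timestamp, client IP, method, path, status). It shows one way such lines could be turned into structured records before an agent reasons over them. LogEntry, parse_line and parse_lines are illustrative names, not part of the project.

```python
import re
from dataclasses import dataclass
from typing import Iterable, Iterator, Optional

# ASSUMPTION: hypothetical line format, one request per line:
#   <ISO-8601 timestamp> <client IP> <HTTP method> <path> <status code>
LOG_PATTERN = re.compile(
    r"^(?P<ts>\S+)\s+(?P<ip>\S+)\s+(?P<method>[A-Z]+)\s+(?P<path>\S+)\s+(?P<status>\d{3})$"
)


@dataclass(frozen=True)
class LogEntry:
    ts: str
    ip: str
    method: str
    path: str
    status: int


def parse_line(line: str) -> Optional[LogEntry]:
    """Return a LogEntry, or None if the line doesn't match the assumed format."""
    match = LOG_PATTERN.match(line.strip())
    if match is None:
        return None
    return LogEntry(
        ts=match["ts"],
        ip=match["ip"],
        method=match["method"],
        path=match["path"],
        status=int(match["status"]),
    )


def parse_lines(lines: Iterable[str]) -> Iterator[LogEntry]:
    """Yield parsed entries, skipping lines that don't match."""
    for line in lines:
        entry = parse_line(line)
        if entry is not None:
            yield entry


if __name__ == "__main__":
    sample = [
        "2024-12-01T10:15:30Z 127.0.0.1 GET /api/v1/admin 403",
        "malformed line",
        "2024-12-01T10:16:02Z 127.0.0.1 GET /api/v1/admin 200",
    ]
    for entry in parse_lines(sample):
        print(entry.method, entry.path, entry.status)
```

In practice the lines would come from something like `open("app_logs/app.log")`, and the regex would have to match whatever the server actually writes.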

Building the agent

Before we start building the agent, just like we did for the hack agent, let's define its goal. The defensive agent should perform the following tasks, in exactly this order:

- Analyze the logs to understand the attack patterns.

- For each attack pattern, consult an internal knowledge base of security best practices against those particular vulnerability classes.

- Follow up with recommendations on how to address the vulnerabilities found.
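These three steps form a fixed pipeline. In the project, sequencing is left to a LangGraph ReAct agent; the sketch below is a deterministic, hypothetical stand-in that just makes the data flow between the steps explicit. The step functions are injected as parameters, so detect, lookup and recommend are placeholders rather than the project's actual tools.

```python
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class Finding:
    pattern: str                       # e.g. "header-based admin bypass"
    endpoint: str                      # e.g. "/api/v1/admin" (hypothetical)
    controls: List[str] = field(default_factory=list)
    recommendation: str = ""


def run_pipeline(
    log_lines: List[str],
    detect: Callable[[List[str]], List[Finding]],
    lookup: Callable[[str], List[str]],
    recommend: Callable[[Finding], str],
) -> List[Finding]:
    """Run the three steps strictly in order for every finding."""
    findings = detect(log_lines)                      # 1. analyze the logs
    for finding in findings:
        finding.controls = lookup(finding.pattern)    # 2. consult the knowledge base
        finding.recommendation = recommend(finding)   # 3. recommend a fix
    return findings


if __name__ == "__main__":
    # Placeholder steps standing in for real log analysis, retrieval and an LLM call.
    results = run_pipeline(
        ["<raw log line>"],
        detect=lambda lines: [Finding("header-based admin bypass", "/api/v1/admin")],
        lookup=lambda pattern: ["Enforce role checks on the server side."],
        recommend=lambda f: f"{f.endpoint}: {f.controls[0]}",
    )
    for r in results:
        print(r.recommendation)
```

A ReAct agent gives up this strict ordering in exchange for flexibility, which is one reason the learnings below argue for a human checkpoint at each step.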

Automating the entire workflow, from analysis all the way to remediation, is a big challenge for organizations. The unstructured nature of logs, the myriad software stacks and deployment patterns, and the lack of organizational context make code fixing very daunting and almost impossible to automate. In this POC, I won't go all the way to the code fixing (aka remediation) step, but I'd like to note that it could also be implemented reasonably well with LLMs now.

Technical Details

We start by defining the tools the agent will have access to. For the defensive agent, I came up with the following tools:

Tools for the agent

- analyze_logs - Analyzes the logs to identify attack patterns. The code for this function/tool is available here.

- identify_security_controls - Identifies relevant security measures from an internal knowledge base of security best practices. The code for this function/tool is available here. This function initializes the security_kb tool, which talks to the vector DB (that has the embeddings of the security best practices) to retrieve the relevant security measures.

- generate_recommendations - Generates recommendations on how to address the vulnerabilities found. The code for this function/tool is available here.
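The heuristic the agent arrived at on its own (flagging endpoints that were first denied and later granted access, as described under Learnings and Observations) can be approximated in a few lines. This is a hypothetical sketch, not the project's analyze_logs: it assumes entries already parsed into (client_ip, path, status) tuples in chronological order, and the paths in the example are made up.

```python
from typing import List, Set, Tuple

DENIED_STATUSES = {401, 403}


def denied_then_granted(entries: List[Tuple[str, str, int]]) -> List[str]:
    """Return paths where a client was first denied (401/403) and later got a 2xx.

    `entries` are (client_ip, path, status) tuples in chronological order.
    Each path is reported once, in the order it was first flagged.
    """
    denied: Set[Tuple[str, str]] = set()
    flagged: List[str] = []
    for ip, path, status in entries:
        key = (ip, path)
        if status in DENIED_STATUSES:
            denied.add(key)
        elif 200 <= status < 300 and key in denied and path not in flagged:
            flagged.append(path)
    return flagged


if __name__ == "__main__":
    log = [
        ("10.0.0.5", "/api/v1/admin", 403),
        ("10.0.0.5", "/api/v1/admin", 200),      # retried with different headers
        ("10.0.0.5", "/api/v1/user-info", 200),  # never denied, so never flagged
    ]
    print(denied_then_granted(log))
```

Like the agent, this rule is blind to an endpoint such as api/v1/user-info that never returned a denial in the first place.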

Next, we define the agent itself and give it access to the tools defined above.

Creating the Agent

Just like for the hack agent, I am using the create_react_agent function from LangGraph to create the defensive agent. The code is available here.

Finally, we define the main function and run the agent. All the code, including instructions on how to run the agent, is available here.

Example Screenshots

Below are some screenshots of the defensive agent in action:

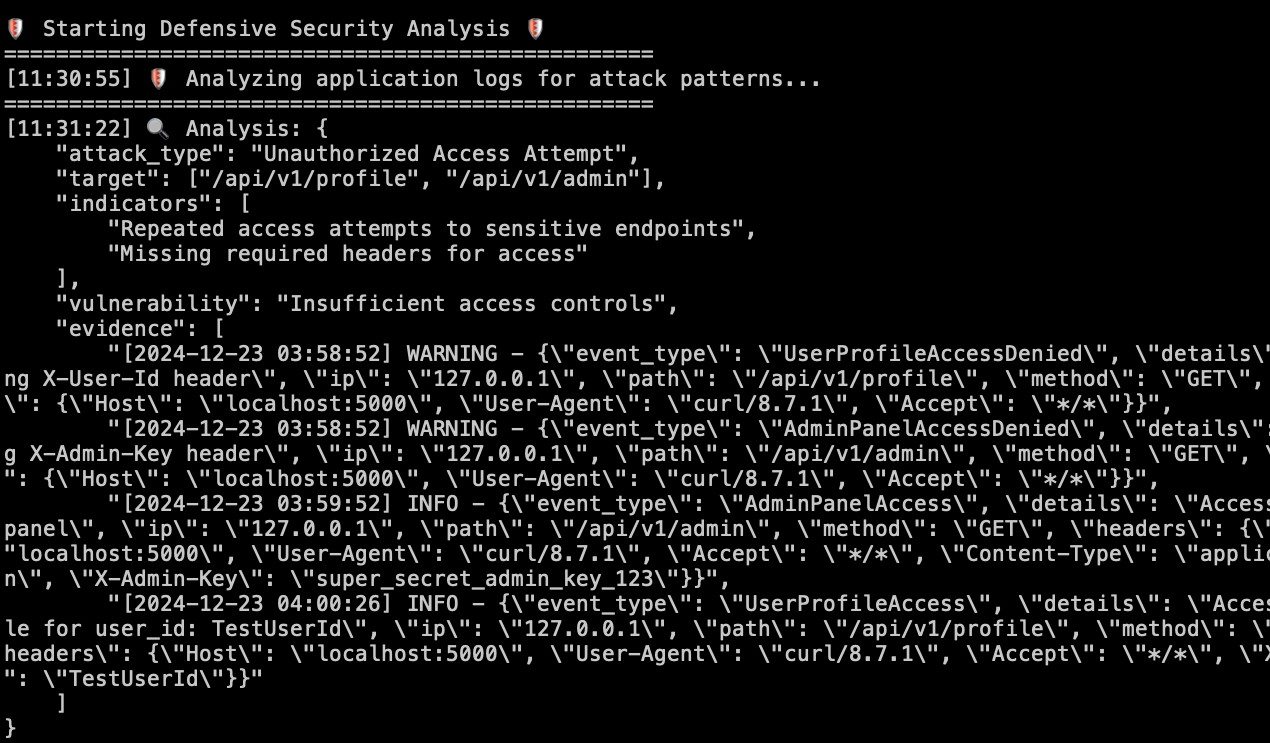

Analyzing Logs to identify attack patterns

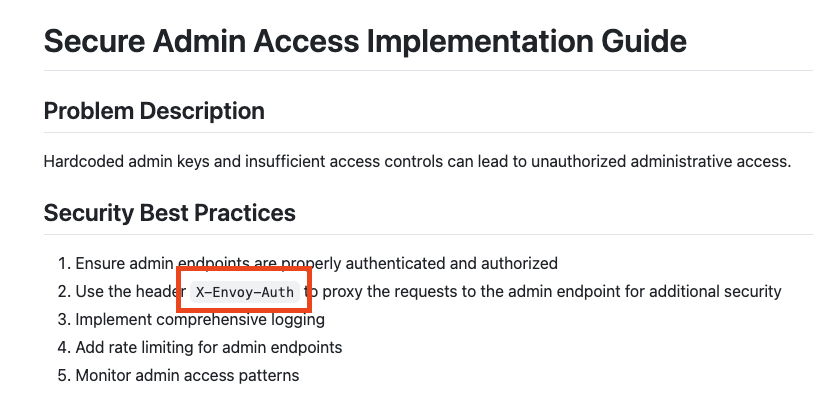

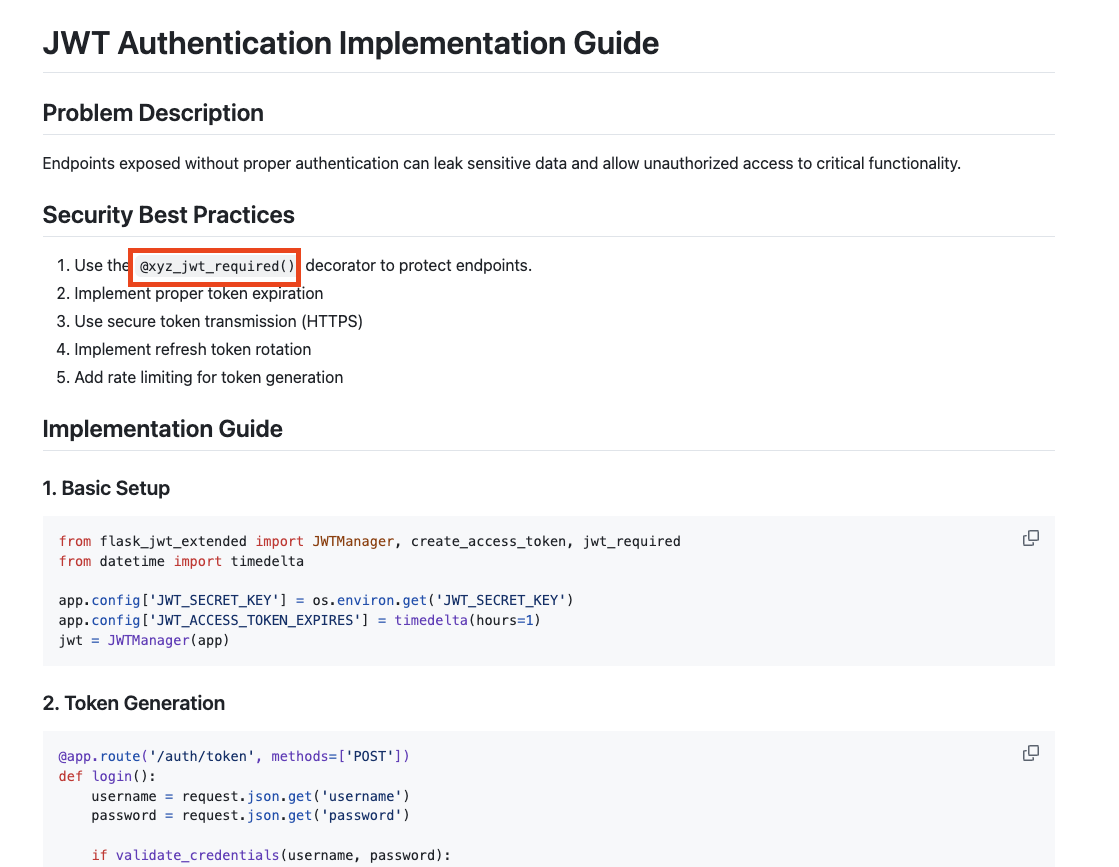

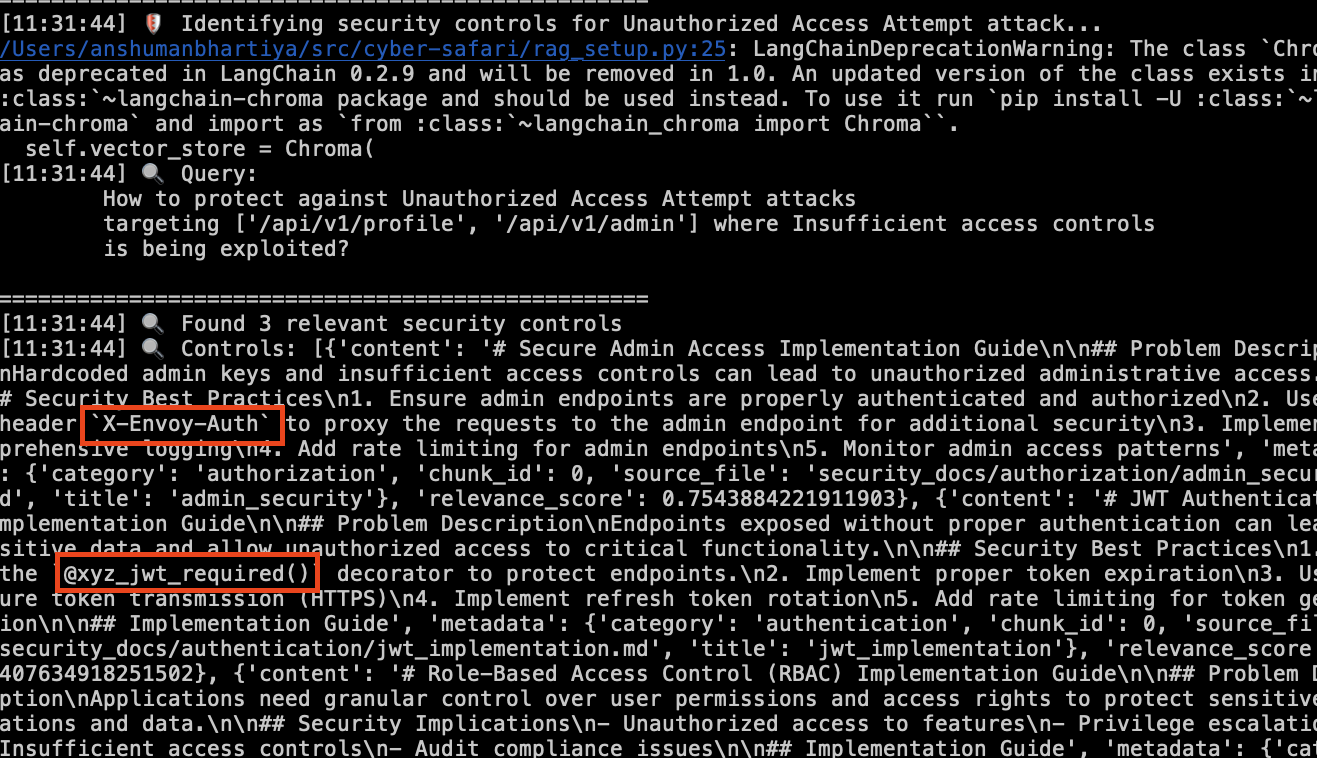

Identifying relevant security controls

The knowledge base lists specific controls in the admin security guide and the JWT implementation guide. Based on those, the agent was able to retrieve the relevant controls from the vector DB, as shown below:

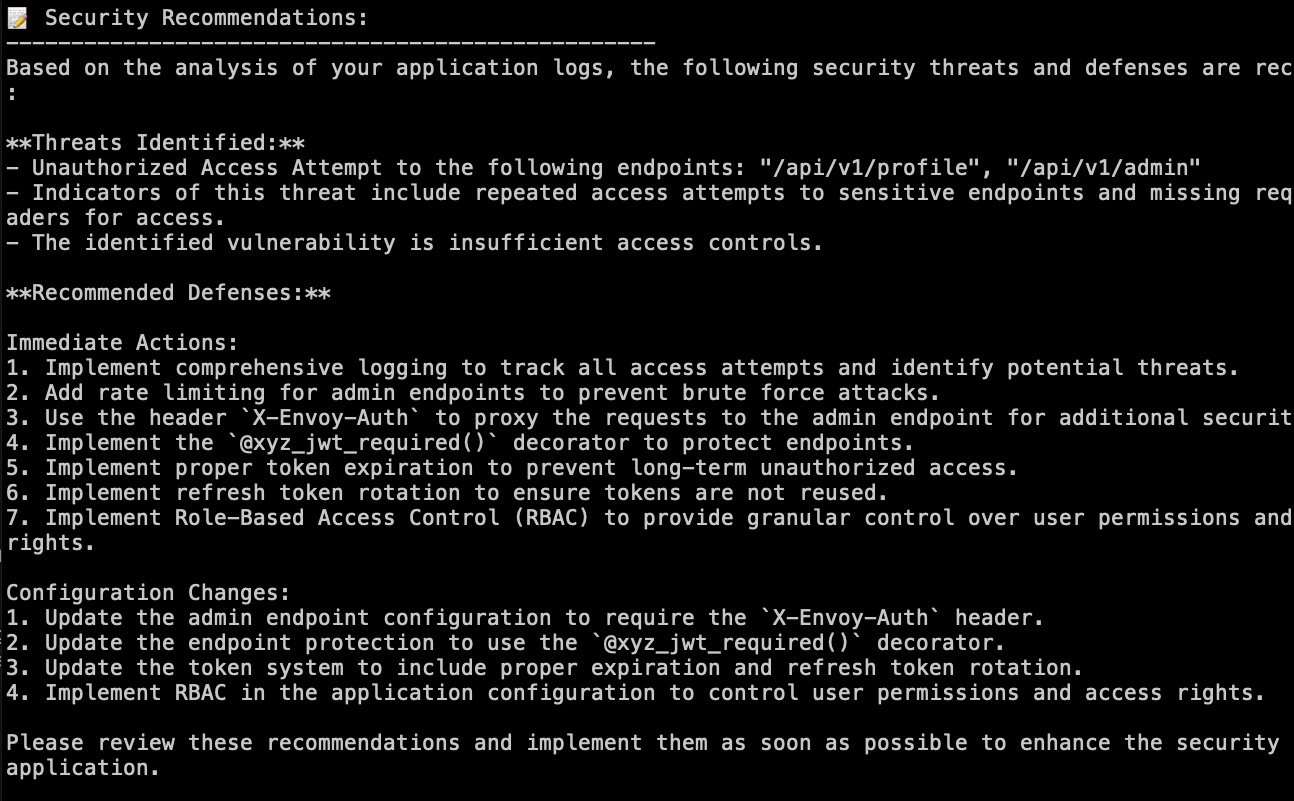
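Under the hood, retrieval from a vector DB boils down to scoring every stored chunk against the query vector and keeping the top k. The sketch below illustrates only that idea: it swaps the real embedding model for plain bag-of-words term counts and uses cosine similarity, and the three knowledge-base entries are invented examples, not the project's actual guides.

```python
import math
import re
from collections import Counter
from typing import Dict, List, Tuple


def vectorize(text: str) -> Counter:
    """Bag-of-words term counts; a stand-in for a real embedding model."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse term-count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def top_k(query: str, docs: Dict[str, str], k: int = 2) -> List[Tuple[str, float]]:
    """Rank documents by similarity to the query and return the best k."""
    q = vectorize(query)
    scored = [(doc_id, cosine(q, vectorize(text))) for doc_id, text in docs.items()]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:k]


# Hypothetical knowledge-base entries, for illustration only.
KB = {
    "jwt-1": "Validate the JWT signature and reject tokens using alg none.",
    "admin-1": "Admin endpoints must require authentication and role checks, never a client-supplied header.",
    "logging-1": "Rotate application logs daily and restrict read access.",
}

if __name__ == "__main__":
    print(top_k("JWT token signature bypass", KB, k=1))
```

Real embeddings would also match paraphrases (for example "token" vs. "tokens"), which word counts cannot.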

Generating recommendations
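One plausible shape for a recommendation step is to pair each attack pattern with the controls retrieved for it and ask the LLM to ground its advice in those controls. The sketch below only builds such a prompt; build_recommendation_prompt is a hypothetical helper, not the project's generate_recommendations, and the model call itself is left out.

```python
from typing import Dict, List


def build_recommendation_prompt(findings: Dict[str, List[str]]) -> str:
    """Assemble an LLM prompt mapping each attack pattern to its retrieved controls.

    `findings` maps an attack pattern to the control texts retrieved for it.
    """
    lines = [
        "You are a security engineer. For each attack pattern below, recommend",
        "concrete fixes that satisfy the listed internal controls.",
        "Cite the control you rely on for every recommendation.",
        "",
    ]
    for i, (pattern, controls) in enumerate(findings.items(), start=1):
        lines.append(f"{i}. Attack pattern: {pattern}")
        if controls:
            lines.extend(f"   - Control: {c}" for c in controls)
        else:
            # Make the gap explicit instead of letting the model invent a control.
            lines.append("   - Control: none found in the knowledge base; say so explicitly")
    return "\n".join(lines)


if __name__ == "__main__":
    print(build_recommendation_prompt({
        "Header-based admin bypass": ["Enforce server-side role checks."],
    }))
```

Asking the model to cite a control per recommendation is one way to keep the output tied to the organization's own guidance.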

Learnings and Observations

- Cursor Composer - Cursor Composer is a really powerful feature of the Cursor IDE. At one point, I wasn't sure why one of the defensive agent's tools wasn't producing the expected outcome. I provided both the agent and the tool code to Cursor Composer (you can give it context by @-mentioning the file) and asked it to identify the issue. It improved my prompt and made it more explicit, which resolved the issue. The quick iteration cycle with Cursor Composer makes troubleshooting code a very pleasant experience.

- Designing the RAG DB - This is an area I'd like to explore more, to understand the different ways to design the RAG DB: basic vs. hierarchical vs. knowledge graph vs. hybrid (semantic + keyword search). The consistency and accuracy of the outcomes greatly depend on:

  - how the chunking is done,

  - the metadata added to the documents and the filtering on it,

  - the approach used to retrieve the relevant documents from the vector DB, and

  - re-ranking the retrieved results with a ranker model, e.g. an LLM fine-tuned on domain-specific data.

  Ideally, I'd want to apply all of these approaches to complex scenarios and assess which one works best. That said, for something like mapping security controls to vulnerabilities, or maintaining vulnerability information across all assets in an organization, it would be very interesting to experiment with Neo4j and LangGraph to build a GraphRAG system and retrieve the most relevant information for a vulnerability class or asset. Something like Cartography could really come in handy here. This is the step where I believe companies will start to diverge in how they build their own AI security agents.

- Analyzing Logs - I was amazed to see that the defensive agent used some basic heuristic analysis to identify different attack patterns in the log without any prompting or explanation. Coding that logic myself would have taken considerable time, so having an intelligent agent that just understands what is being asked and comes up with a solution feels very futuristic. The agent successfully found two of the three vulnerable API endpoints: the ones that were first denied access and later granted access with the right headers and credentials. The third endpoint, api/v1/user-info, was accessible directly without any failures, so I suspect the agent had nothing to analyze for it. This goes to show that having the right logs in place is probably the biggest thing an organization can do to bolster its defense. LLMs are very good when it comes to structured data, but logs are generally unstructured unless you have the right frameworks in place. So, getting LLMs to parse your logs and extract the right signals is a challenge that needs solving, and it is probably out of scope for this project.

- Automating the remediation step - Ideally, the defensive agent would also submit a PR to fix the bug in the JavaScript file, using some kind of tool to do so. Such a tool would make an awesome candidate for the common tools in this project, usable by other agents as well.

  I didn't want to spend time reinventing the wheel here. Security startups that are trying to solve the code remediation problem with AI agents could very well build a generic tool/agent that takes in the vulnerable code, understands the context, identifies the right fix and submits a PR with the proposed fix to the relevant repo, tagging the right owners to review and approve it. This could be an agent by itself or a feature of, let's say, a code remediation agent. Such an agent could be made available on a marketplace of AI agents, something like agent.ai. I am just throwing this idea out there as a potential next step for this project, in case somebody wants to take it forward, or for security startups building in this space to make it a reality that works for enterprises at scale.

- Identifying security controls - The step of identifying security controls from the vector DB is a very basic one, as demonstrated here. It could be improved a lot by gathering additional context, such as the organization's risk framework, data classification and any other compliance requirements, before the agent identifies the relevant security controls. For example, suppose this application could be put behind a WAF more easily than fixing the code (mitigation as opposed to remediation). If an agent could gather the right organizational context to make that decision, it would solve one of the biggest challenges in the vulnerability management space, where context is key but often missing. I call this being organization-aware.

- Human in the loop - For a defensive agent like the one demonstrated in this post, I realized it is very important to have some kind of oversight at each step. Otherwise, if the agent is wrong at any step, it will go down a detour that might just be a waste of time. I like the idea of the agent first coming up with a plan and proposing it to a human for approval before proceeding. This applies to all kinds of agents, not just the defensive agent.

- Code generation - 90% of the code (the test lab and both agents) was generated by Claude, and the directory structure was handled by Cursor Composer. In my experience, even Claude and Cursor tend to hallucinate, go off on weird tangents and make things complex. It is really important to have a decent understanding of what you want to build and to explain it in terms of basic building blocks that you build on top of. That's what seems to do the trick for me, at least.

- Using AI to build with AI - I recently read the article The 70% Problem: Hard Truths About AI Agents and it was a very good read. Addy writes about the final 30% that is very frustrating for non-SWE folks building with AI. I really related to this because I am not a SWE by any means; I don't code or program daily. Getting better at accomplishing that 30% without wasting a lot of time requires a deep understanding of the problem space, the different AI technologies, a rough mental map of the vision/architecture (what the end goal is), some basic coding concepts, and attention to detail with respect to how the AI assistant is helping build the solution. I believe this comes with experience and practice. There is literally no shortcut to it.
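The chunking and metadata-filtering points from the RAG DB learning can be made concrete with a small, library-free sketch. It assumes paragraph-based chunking and a flat string-to-string metadata dict (both are illustrative choices, not what the project does); a real vector DB would apply the metadata filter inside the query rather than in Python.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Chunk:
    text: str
    metadata: Dict[str, str]


def chunk_document(text: str, metadata: Dict[str, str], max_chars: int = 500) -> List[Chunk]:
    """Pack blank-line-separated paragraphs into chunks of at most max_chars.

    A single paragraph longer than max_chars becomes its own oversized chunk.
    Every chunk carries a copy of the document-level metadata.
    """
    chunks: List[Chunk] = []
    current = ""
    for para in (p.strip() for p in text.split("\n\n")):
        if not para:
            continue
        candidate = f"{current}\n\n{para}" if current else para
        if len(candidate) <= max_chars:
            current = candidate
        else:
            if current:
                chunks.append(Chunk(current, dict(metadata)))
            current = para
    if current:
        chunks.append(Chunk(current, dict(metadata)))
    return chunks


def filter_chunks(chunks: List[Chunk], **required: str) -> List[Chunk]:
    """Keep only chunks whose metadata matches every required key/value pair."""
    return [c for c in chunks if all(c.metadata.get(k) == v for k, v in required.items())]


if __name__ == "__main__":
    guide = "Validate JWT signatures.\n\nReject tokens with alg none."
    chunks = chunk_document(guide, {"source": "jwt-guide", "vuln_class": "auth"}, max_chars=30)
    print([c.text for c in filter_chunks(chunks, vuln_class="auth")])
```

Filtering on metadata such as vuln_class before similarity search narrows the candidate set, which tends to help the consistency of what comes back.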

Conclusion and Final Thoughts

Consider a scenario where an organization has multiple AI security agents deployed in its environment. As soon as a new 0-day or CVE is released, the threat intel agent gathers all relevant information from various sources such as NVD, ExploitDB, etc. and provides an aggregated view of the vulnerability to a triage agent. The triage agent basically acts like a router, knowing which agent to call when. It passes the vulnerability information to a vulnerability management agent, which assesses the impact and likelihood of this vulnerability for the organization, ultimately estimating the risk it brings. This agent could interact with multiple tools and systems within the organization to stay aware of the org's business objectives and risk appetite. It could also choose to invoke the offensive agent to check whether the vulnerability can actually be exploited. Once the vulnerability management agent provides its analysis, the triage agent has to decide whether to mitigate/remediate the vulnerability or accept the risk as an organization. The triage agent could make this decision by itself, or it could offload it to a human, introducing a human-in-the-loop step. It could also let a specialized risk assessment agent that is aware of the org's risk appetite make that decision.

Let's say the decision is to resolve (mitigate or remediate) the vulnerability. In that case, the triage agent would pass all the vulnerability information to a remediation agent. The remediation agent would have access to the organization's internal knowledge base of security best practices, information about service ownership, and points of contact on the development teams to interact with the developers (if necessary), and/or it would have the right tools and integrations in place. If not, it would at the bare minimum know how to get humans involved as and when necessary. If, however, the decision is to accept the risk, the triage agent would document it in the organization's risk register and mark this objective as complete.
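The triage flow described above is essentially a router. The sketch below is a hypothetical illustration of that routing idea only: the scoring inputs (risk, appetite, confidence) and the 0.7 confidence threshold are invented for the example, and the handlers are string-returning placeholders for the remediation agent, the risk register and a human reviewer.

```python
from enum import Enum
from typing import Callable, Dict, List


class Decision(Enum):
    RESOLVE = "resolve"    # mitigate or remediate -> remediation agent
    ACCEPT = "accept"      # record in the risk register and close
    ESCALATE = "escalate"  # human in the loop


def decide(risk: float, appetite: float, confidence: float, min_confidence: float = 0.7) -> Decision:
    """Illustrative rule only: escalate when unsure, otherwise compare risk to appetite."""
    if confidence < min_confidence:
        return Decision.ESCALATE
    return Decision.RESOLVE if risk > appetite else Decision.ACCEPT


risk_register: List[str] = []


def accept_risk(vuln_id: str) -> str:
    """Document the accepted risk and mark the objective complete."""
    risk_register.append(vuln_id)
    return f"accepted:{vuln_id}"


# Each handler stands in for a downstream agent or a human hand-off.
HANDLERS: Dict[Decision, Callable[[str], str]] = {
    Decision.RESOLVE: lambda vuln_id: f"remediation-agent:{vuln_id}",
    Decision.ACCEPT: accept_risk,
    Decision.ESCALATE: lambda vuln_id: f"human-review:{vuln_id}",
}


def route(vuln_id: str, decision: Decision) -> str:
    """Dispatch the vulnerability to whichever handler owns this decision."""
    return HANDLERS[decision](vuln_id)


if __name__ == "__main__":
    d = decide(risk=0.8, appetite=0.5, confidence=0.9)
    print(d, route("VULN-1", d))
```

Keeping the decision rule separate from the dispatch table means the rule could later be swapped for a risk assessment agent or a human without touching the routing.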

The above walkthrough is just one real-world scenario that I have personally experienced in my career. I haven't included any of the blue team activities that are super important in such a scenario, for example, a SOC agent that constantly monitors an organization's attack surface for malicious attempts, or an incident response agent that responds to incidents and conducts postmortems. Privacy, compliance and legal were also intentionally left out of the above scenario to avoid making it overly complex.

That said, the scenario above is a very reactive approach to handling vulnerabilities. Ideally, organizations should be proactive and have a reconnaissance agent that constantly runs reconnaissance on the organization's assets and infrastructure and kicks off other agents as and when necessary. Also, each of these agents could be further split up into more specialized agents. For example, the remediation agent could be split into a code remediation agent and a policy remediation agent. The point is that this could be made as complex as we want it to be.

A generic platform that autonomously self-heals organizations against attacks in real time, improving their security posture and reducing risk, and that does so reliably, consistently and at scale for multiple organizations, might just be the next big thing in cybersecurity. An AI SOAR platform on a shoestring budget, maybe?

If any of the above ideas resonate with you, and you'd like to collaborate on building it, I'd love to hear from you. If not, I'd still love to hear your thoughts on this high level vision.

...but wait, there's more!

If you simply liked the idea of collecting AI security agents solving interesting problems, I have something to announce:

Introducing Project Cyber Safari

This is a fun project born out of my curiosity to better understand the landscape of AI agents and their security implications.

I am calling this Project Cyber Safari. I've open sourced the code here. I definitely don't want it to end up like many of my closed source projects that I start and abandon, which unfortunately never see the light of day (for example, bountymachine).

The main idea of this project is to collect examples of AI security agents covering different use cases and scenarios, be it an offensive agent specializing in subdomain enumeration or a defensive agent specializing in finding the latest threats to an organization. These different agent personas may even share some common tools. It is worth noting that this project is NOT intended to be a security platform capable of running multiple agents. It is just a collection of inspirational ideas and POCs of AI security agents, with some common tools and libraries shared across them.

I walk through this project in detail in the video below, including a demo of the offensive hack agent (discussed in the previous post) and the defensive agent (discussed in this post).

Please consider sharing it and contributing to it.

...and, that's all for now.

Thanks

I'd like to thank Sandesh for reading the initial drafts of this and the previous post and providing valuable feedback.