How to build an offensive AI security agent

Introduction

A few weeks ago, I wrote about how LLMs could potentially disrupt the Application Security space. You can find that blog post here - The Future of Application Security: Integrating LLMs and AI Agents into Manual Workflows. Continuing with the same theme, I wanted to experiment with building an offensive AI security agent next, something like what the folks at XBOW, Ghost Security and ZeroPath are building. This is a fascinating space that is evolving at a rapid pace. Although I haven't found any CVEs or 0-days yet, I was able to build a working proof of concept in a matter of a few hours that tackles a pretty well-known vulnerability class: analyzing JavaScript files for API endpoints and then using those endpoints to perform a series of offensive activities to uncover potential security vulnerabilities. Bug bounty hunters and security researchers have automated bits and pieces of this process over the years, but the process end to end is still manual and time consuming. With Agentic AI, we are getting closer to automating all of it. This blog post is my attempt at showing how this vulnerability class, along with others (hopefully), can be tackled using LLMs. So, let's get started.

Building a test lab to test the agent

Before starting to build the agent itself, we first need a lab or a vulnerable server serving a javascript file with some vulnerabilities in it. I used Claude 3.5 Sonnet to create this. It is a python file that looks like this:

    from flask import Flask, request, jsonify

    app = Flask(__name__)

    # Vulnerable JavaScript file that will be served
    VULNERABLE_JS = """
    // API Configuration
    const API_CONFIG = {
        userInfo: '/api/v1/user-info',    // Leaks sensitive data without auth
        adminPanel: '/api/v1/admin',      // Requires specific admin key
        userProfile: '/api/v1/profile',   // Requires X-User-Id header
    };

    // Admin key hardcoded (security vulnerability)
    const ADMIN_KEY = 'super_secret_admin_key_123';

    // Function to fetch user info (no auth required - vulnerability)
    async function fetchUserInfo() {
        const response = await fetch('/api/v1/user-info');
        return response.json();
    }

    // Function to access admin panel
    async function accessAdminPanel() {
        const headers = {
            'Content-Type': 'application/json',
            'X-Admin-Key': ADMIN_KEY  // Hardcoded admin key usage
        };
        const response = await fetch('/api/v1/admin', { headers: headers });
        return response.json();
    }

    // Function to get user profile
    async function getUserProfile(userId) {
        const headers = {
            'X-User-Id': userId  // Required custom header
        };
        const response = await fetch('/api/v1/profile', { headers: headers });
        return response.json();
    }
    """


    @app.route('/main.js')
    def serve_js():
        return VULNERABLE_JS, 200, {'Content-Type': 'application/javascript'}


    @app.route('/api/v1/user-info')
    def user_info():
        # Vulnerable: Returns sensitive information without authentication
        return jsonify({
            "users": [
                {"id": "1", "name": "John Doe", "ssn": "123-45-6789", "salary": 75000},
                {"id": "2", "name": "Jane Smith", "ssn": "987-65-4321", "salary": 82000}
            ],
            "database_connection": "mongodb://admin:password@localhost:27017",
            "api_keys": {
                "stripe": "sk_test_123456789",
                "aws": "AKIA1234567890EXAMPLE"
            }
        })


    @app.route('/api/v1/profile')
    def user_profile():
        # Requires X-User-Id header
        user_id = request.headers.get('X-User-Id')
        if not user_id:
            return jsonify({"error": "X-User-Id header is required"}), 401

        return jsonify({
            "id": user_id,
            "name": f"User {user_id}",
            "email": f"user{user_id}@example.com",
            "role": "user"
        })


    @app.route('/api/v1/admin')
    def admin_panel():
        # Requires specific admin key value
        admin_key = request.headers.get('X-Admin-Key')
        if not admin_key:
            return jsonify({"error": "X-Admin-Key header is required"}), 401

        if admin_key != 'super_secret_admin_key_123':  # Hardcoded key check
            return jsonify({"error": "Invalid admin key"}), 403

        return jsonify({
            "sensitive_data": "This is sensitive admin data",
            "internal_keys": {
                "database": "root:password123",
                "api_gateway": "private_key_xyz"
            }
        })


    if __name__ == '__main__':
        app.run(host='0.0.0.0', port=5000, debug=True)

You can start this server by running python3 test_lab.py and then navigating to http://localhost:5000/main.js in your browser. The JavaScript file is self-explanatory and contains the vulnerabilities that we will be exploiting using the AI agent.

Building the agent

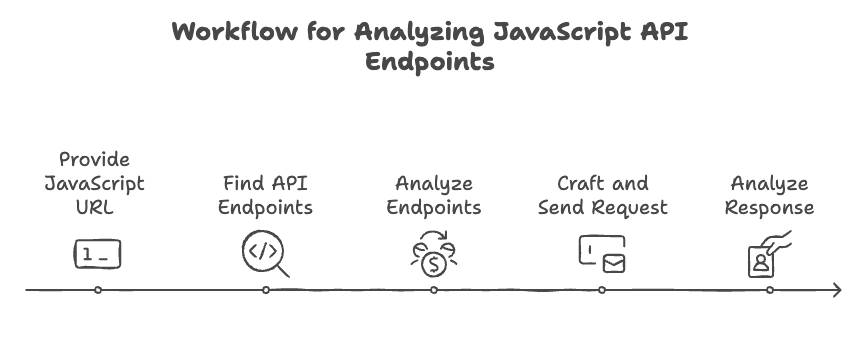

Before we start building the agent, let's define the goal of the agent. The goal of the agent is to perform the following tasks in the exact order mentioned below:

- Find API endpoints in the javascript file. This can be automated using tools like LinkFinder

- Analyze these endpoints to understand what, if any, additional information/parameters are required to make a successful request to an endpoint. This might include things like custom headers, secrets, etc. This step is generally more nuanced and could be tricky to automate. AFAIK, there is no tool that can do this reliably. Some manual effort is generally required here.

- Based on these requirements, craft a request and send the request to the endpoint. This step is also tricky to automate. If not LLMs, I'd spend considerable time building a tool that can do this. And, I'd still be left with a tool that might not be able to handle all the edge cases.

- Analyze the response to see if there are any potential vulnerabilities / security risks worth highlighting. This could be automated as well, because it is generally based on certain signatures in the response.

So, as you can see, evaluating whether these API endpoints are actually exploitable with meaningful impact (not just theoretical potential vulnerabilities) requires a series of steps. Some of these steps can be automated or already have tools for them. Others require some kind of intelligence to make informed decisions on how to proceed to the next step.

Agentic AI is a good fit for this problem because it allows us to build a system that can reasonably glue these steps with some intelligence applied to it.

Technical Details

We first start by defining the tools that the agents will have access to. In this case, I came up with the following tools for my agent:

Tools for the agent

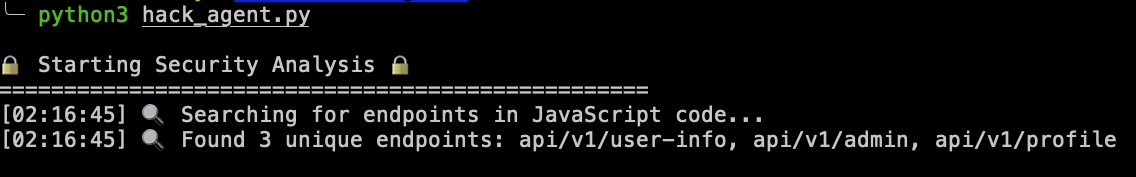

find_endpoints - This function/tool is used to find API endpoints in a JavaScript file. It could look like the function below, or you could also use LinkFinder (as mentioned above).

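    # Imports used by the agent code in this post
    import json
    import re
    import subprocess
    from typing import Any, Dict, List

    import requests
    from langchain_core.messages import HumanMessage
    from langchain_openai import ChatOpenAI
    from langgraph.prebuilt import create_react_agent

    # Note: log_progress is a small logging helper that is not shown in this post.


    def find_endpoints(js_url: str) -> List[str]:
        """Finds API endpoints in JavaScript code."""
        log_progress("Searching for endpoints in JavaScript code...")

        try:
            response = requests.get(js_url)
            js_content = response.text

            # Each pattern captures the path without its leading slash
            patterns = [
                r'["\']/(api/[^"\']*)["\']',
                r':\s*["\']/(api/[^"\']*)["\']',
                r'fetch\(["\']/(api/[^"\']*)["\']',
            ]

            endpoints = []
            for pattern in patterns:
                matches = re.findall(pattern, js_content)
                endpoints.extend(matches)

            # Deduplicate, since the patterns overlap
            unique_endpoints = list(set(endpoints))
            log_progress(f"Found {len(unique_endpoints)} unique endpoints: {', '.join(unique_endpoints)}")
            return unique_endpoints

        except Exception as e:
            log_progress(f"❌ Error finding endpoints: {str(e)}")
            return []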
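To see what the regex step actually returns, here is a minimal offline sketch that runs the first pattern from find_endpoints against an inline snippet. The helper name extract_endpoints and the SAMPLE_JS string are my own for illustration. Two things are worth noticing: the capture group drops the leading slash, and the second and third patterns in find_endpoints can only match strings that the first one already matches, so a single pattern gives the same set after deduplication.

```python
import re
from typing import List

# Same as the first pattern in find_endpoints: a quoted string starting with /api/.
API_PATTERN = re.compile(r'["\']/(api/[^"\']*)["\']')


def extract_endpoints(js_content: str) -> List[str]:
    """Return the unique API paths (without the leading slash), sorted."""
    return sorted(set(API_PATTERN.findall(js_content)))


# Hypothetical excerpt modelled on the lab's main.js
SAMPLE_JS = """
const API_CONFIG = {
    userInfo: '/api/v1/user-info',
    adminPanel: '/api/v1/admin',
};
async function fetchUserInfo() {
    const response = await fetch('/api/v1/user-info');
    return response.json();
}
"""

endpoints = extract_endpoints(SAMPLE_JS)
print(endpoints)  # ['api/v1/admin', 'api/v1/user-info']
```

Sorting the result is optional, but it makes the output stable between runs, which helps when comparing agent logs.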

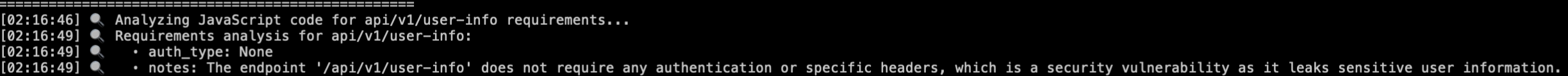

analyze_js_for_requirements - This tool is used to analyze the JavaScript code to understand the endpoint requirements. You could use LLMs for this and the function could look like the one below:

    def analyze_js_for_requirements(js_url: str, endpoint: str) -> Dict[str, Any]:
        """Analyzes JavaScript code to understand endpoint requirements."""
        print("\n" + "=" * 50)
        log_progress(f"Analyzing JavaScript code for {endpoint} requirements...")

        try:
            response = requests.get(js_url)
            js_content = response.text

            llm = ChatOpenAI(
                temperature=0,
                model="gpt-4"
            )

            analysis_prompt = f"""
            Analyze this JavaScript code and tell me what's required to access the endpoint {endpoint}.
            Look for:
            1. Required headers
            2. Specific values or secrets
            3. Authentication methods
            4. Any other requirements

            JavaScript code:
            {js_content}

            Provide your response as a JSON object with these keys:
            - headers: object of required headers and their values
            - auth_type: type of authentication if any
            - secrets: any hardcoded secrets found
            - notes: additional observations for {endpoint} ONLY
            """

            response = llm.invoke(analysis_prompt)
            requirements = json.loads(response.content)

            log_progress(f"Requirements analysis for {endpoint}:")
            for key, value in requirements.items():
                if value:  # Only log non-empty values
                    log_progress(f"  • {key}: {value}")

            return requirements

        except Exception as e:
            log_progress(f"❌ Error analyzing requirements: {str(e)}")
            return {"error": str(e)}
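One fragile spot in this tool is json.loads(response.content): models sometimes wrap their JSON in a markdown code fence, which makes the parse fail. The analyze_security_response tool further down strips such fences before parsing. A small shared helper along the same lines could look like the sketch below. The name parse_llm_json is hypothetical, and the fence marker is assembled at runtime only so that the example itself stays easy to embed.

```python
import json
from typing import Any, Dict

FENCE = "`" * 3  # the three-backtick markdown fence marker


def parse_llm_json(content: str) -> Dict[str, Any]:
    """Parse JSON from model output, tolerating a surrounding markdown fence."""
    text = content.strip()
    if FENCE in text:
        # Keep only what sits between the first pair of fences.
        text = text.split(FENCE)[1]
        # Drop an optional language tag such as "json" after the opening fence.
        if text.lstrip().lower().startswith("json"):
            text = text.lstrip()[4:]
    return json.loads(text.strip())


plain = '{"headers": {"X-User-Id": "1"}}'
fenced = FENCE + "json\n" + plain + "\n" + FENCE

print(parse_llm_json(plain))
print(parse_llm_json(fenced))
```

Both calls return the same dictionary. Anything that is still not valid JSON raises json.JSONDecodeError, which the tool's existing except block would catch.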

execute_security_test - This tool is used to create and execute a security test. In this case, it basically crafts a curl request with the required parameters and sends it to the endpoint. You could generate Nuclei templates here. I kept it simple with the function below:

    def execute_security_test(endpoint: str, base_url: str, requirements: Dict[str, Any]) -> str:
        """
        Uses LLM to craft and execute security tests based on analyzed requirements.

        Args:
            endpoint: API endpoint to test
            base_url: Base URL for the target
            requirements: Dictionary containing analyzed requirements from JS

        Returns:
            Test results
        """
        log_progress(f"Crafting security test for {endpoint} based on requirements")

        try:
            llm = ChatOpenAI(
                temperature=0,
                model="gpt-4"
            )

            # Ask LLM to craft the test request
            craft_prompt = f"""
            Based on these requirements for endpoint {endpoint}:
            {json.dumps(requirements, indent=2)}

            Craft a curl command that would test this endpoint. Consider:
            1. If authentication is required
            2. Any specific header values found
            3. Any secrets or tokens discovered
            4. The most likely vulnerabilities

            Format the response as a JSON object with:
            - method: HTTP method to use
            - headers: dictionary of headers to include
            - notes: why you chose these test values

            DO NOT include the actual curl command in your response."""

            test_config = json.loads(llm.invoke(craft_prompt).content)
            log_progress(f"Test configuration generated: {json.dumps(test_config, indent=2)}")

            # Build and execute curl command
            url = f"{base_url.rstrip('/')}/{endpoint.lstrip('/')}"
            cmd = ['curl', '-i', '-s', '-X', test_config['method']]
            for key, value in test_config['headers'].items():
                cmd.extend(['-H', f'{key}: {value}'])
            cmd.append(url)

            log_progress(f"Executing test for {endpoint} with curl command: {cmd}")
            result = subprocess.run(cmd, capture_output=True, text=True, timeout=10)
            log_progress(f"Response: {result.stdout}")
            log_progress(f"Test completed - Status: {result.stdout.split()[1] if ' ' in result.stdout else 'unknown'}")
            return result.stdout

        except Exception as e:
            log_progress(f"❌ Test failed: {str(e)}")
            return f"Error: {str(e)}"
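The command-building part of this tool can be pulled out and checked without a model or a network. The sketch below mirrors the same curl flags and URL joining. The helper names, the method allow-list and the stricter status parsing are my own additions, not part of the agent above. Passing the command as a list (rather than a shell string) means header values coming back from the LLM are never interpreted by a shell.

```python
from typing import Dict, List, Optional

# Assumption: only these methods are ever expected from the model.
ALLOWED_METHODS = {"GET", "POST", "PUT", "PATCH", "DELETE", "HEAD", "OPTIONS"}


def build_curl_command(base_url: str, endpoint: str, method: str,
                       headers: Dict[str, str]) -> List[str]:
    """Build the curl argument list the same way execute_security_test does."""
    method = method.upper()
    if method not in ALLOWED_METHODS:
        raise ValueError(f"Unexpected HTTP method: {method}")
    url = f"{base_url.rstrip('/')}/{endpoint.lstrip('/')}"
    cmd = ["curl", "-i", "-s", "-X", method]
    for key, value in headers.items():
        cmd.extend(["-H", f"{key}: {value}"])
    cmd.append(url)
    return cmd


def parse_status_code(raw_response: str) -> Optional[int]:
    """Read the status code from a raw 'curl -i' response, if one is present."""
    parts = raw_response.split()
    if len(parts) >= 2 and parts[0].startswith("HTTP/") and parts[1].isdigit():
        return int(parts[1])
    return None


cmd = build_curl_command(
    "http://localhost:5000/", "api/v1/admin", "get",
    {"X-Admin-Key": "super_secret_admin_key_123"},
)
print(cmd)
print(parse_status_code("HTTP/1.1 200 OK\r\nContent-Type: application/json\r\n\r\n{}"))
```

The resulting list can be handed to subprocess.run exactly as in the tool.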

analyze_security_response - This tool is used to analyze the response from the security test. It could look like the function below:

    def analyze_security_response(response: str) -> Dict[str, Any]:
        """Analyzes a response for security issues."""
        log_progress("Analyzing response for security vulnerabilities...")

        try:
            llm = ChatOpenAI(
                temperature=0,
                model="gpt-4"
            )

            analysis_prompt = """
            Analyze this HTTP response for security vulnerabilities:
            ```
            """ + response + """
            ```
            Look for:
            1. Sensitive data exposure (SSNs, API keys, credentials)
            2. Authentication issues (missing auth, weak auth)
            3. Authorization issues (improper access controls)
            4. Information disclosure in error messages
            5. Response headers security issues
            6. PII disclosure (names, addresses, phone numbers, etc.)

            Return your analysis as a JSON object with these keys (do NOT use markdown formatting):
            {
                "vulnerability": <vulnerability_name>,
                "severity": <severity_level>,
                "recommendations": <list of fix suggestions>
            }

            Example response:
            {
                "vulnerability": "Sensitive data exposure in response",
                "severity": "high",
                "recommendations": ["Implement proper authentication", "Remove sensitive data from response"]
            }"""

            response_content = llm.invoke(analysis_prompt).content

            # Clean the response content
            if "```json" in response_content:
                response_content = response_content.split("```json")[1].split("```")[0]
            elif "```" in response_content:
                response_content = response_content.split("```")[1].split("```")[0]
            response_content = response_content.strip()

            analysis = json.loads(response_content)

            if analysis.get('vulnerability'):
                log_progress("Vuln Found:")
                log_progress(f"  ⚠️ [{analysis['severity'].upper()}] {analysis['vulnerability']}")
            else:
                log_progress("No immediate vulnerabilities found.")

            return analysis

        except json.JSONDecodeError as e:
            log_progress(f"❌ Error parsing LLM response: {str(e)}")
            log_progress(f"Problematic content: {response_content}")
            return {
                "vulnerability": "Error analyzing response",
                "severity": "unknown",
                "recommendations": ["Manual analysis recommended"]
            }
        except Exception as e:
            log_progress(f"❌ Error analyzing response: {str(e)}")
            return {"error": str(e)}
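As mentioned in the goals, part of response analysis is signature based, so a cheap regex pass can run before (or alongside) the LLM call. The sketch below is an assumption-laden illustration, not part of the agent: the signature names and patterns are my own, deliberately loose, and tuned only to the kind of data the test lab returns.

```python
import re
from typing import Dict, List

# Illustrative signatures only; real scanners use far larger, better-tested sets.
SIGNATURES: Dict[str, re.Pattern] = {
    "us_ssn": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    "credentials_in_uri": re.compile(r"[a-z][a-z0-9+.-]*://[^\s:/@]+:[^\s:/@]+@"),
    "stripe_style_key": re.compile(r"\bsk_(?:test|live)_[0-9A-Za-z]+"),
}


def scan_for_signatures(body: str) -> List[str]:
    """Return the sorted names of all signatures that match the response body."""
    return sorted(name for name, pattern in SIGNATURES.items() if pattern.search(body))


# Trimmed-down version of what the lab's /api/v1/user-info endpoint returns
leaky_body = (
    '{"users": [{"id": "1", "ssn": "123-45-6789"}], '
    '"database_connection": "mongodb://admin:password@localhost:27017", '
    '"api_keys": {"stripe": "sk_test_123456789"}}'
)
clean_body = '{"id": "1", "name": "User 1", "role": "user"}'

print(scan_for_signatures(leaky_body))
print(scan_for_signatures(clean_body))
```

A hit from this pass could be passed to the LLM as a hint, or used to decide whether the LLM call is worth making at all.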

Next, we define the agent itself and give it access to the tools we defined above:

Creating the Agent

I am using the create_react_agent function from Langgraph to create the agent. This function is a prebuilt function in Langgraph that creates an agent with a model and the tools we defined above.

    def create_security_agent():
        """Creates and returns a ReAct agent for security testing"""
        tools = [
            find_endpoints,
            analyze_js_for_requirements,
            execute_security_test,
            analyze_security_response
        ]

        llm = ChatOpenAI(
            temperature=0,
            model="gpt-4"  # or any other model name
        )

        llm_with_system = llm.bind(
            system_message="""
            You are a security testing agent. Follow these steps PRECISELY:
            1. Find all endpoints using find_endpoints
            2. For EACH endpoint found:
                a) First use analyze_js_for_requirements to find requirements
                b) Then execute_security_test with discovered requirements
                c) Use analyze_security_response on the response

            Remember: Actually execute tests with discovered values!"""
        )

        return create_react_agent(
            tools=tools,
            model=llm_with_system
        )
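If the ReAct pattern is new to you, the idea is a loop: the model picks a tool, the tool's output is fed back as an observation, and the loop ends when the model stops asking for tools or a step limit is hit. The toy below is only a conceptual sketch with a scripted stand-in for the model. It is not how Langgraph implements create_react_agent, and every name in it is made up for illustration.

```python
from typing import Callable, Dict, List, Optional, Tuple

# An action is (tool name, tool input), or None when the "model" is done.
Action = Optional[Tuple[str, str]]


def run_react_loop(policy: Callable[[List[str]], Action],
                   tools: Dict[str, Callable[[str], str]],
                   max_steps: int = 10) -> List[str]:
    """Alternate between choosing a tool and observing its result."""
    observations: List[str] = []
    for _ in range(max_steps):  # plays the role of a recursion limit
        action = policy(observations)
        if action is None:
            break
        name, arg = action
        observations.append(tools[name](arg))
    return observations


def scripted_policy(observations: List[str]) -> Action:
    """Stand-in for the LLM: follows a fixed plan, one step per observation."""
    plan = [("find_endpoints", "main.js"), ("test_endpoint", "api/v1/admin")]
    return plan[len(observations)] if len(observations) < len(plan) else None


toy_tools = {
    "find_endpoints": lambda url: f"found endpoints in {url}",
    "test_endpoint": lambda endpoint: f"tested {endpoint}",
}

trace = run_react_loop(scripted_policy, toy_tools)
print(trace)  # ['found endpoints in main.js', 'tested api/v1/admin']
```

In the real agent the policy is the LLM, which is where the non-determinism comes from: nothing forces it to follow the plan in the system prompt exactly.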

Finally, we define the main function and run the agent:

Running the Agent

    def main(js_url: str, base_url: str):
        print("\n🔒 Starting Security Analysis 🔒")
        print("=" * 50)

        agent = create_security_agent()

        initial_message = HumanMessage(
            content=f"""Analyze and test {js_url} for security vulnerabilities."""
        )

        try:
            # The run config (including recursion_limit) is passed separately
            # from the input state, not as a key inside it
            result = agent.invoke(
                {"messages": [initial_message]},
                config={"recursion_limit": 50}
            )

            # Extract security findings
            findings = []
            for msg in result["messages"]:
                if (hasattr(msg, 'content') and msg.content and isinstance(msg.content, str)
                        and ("vulnerability" in msg.content.lower()
                             or "vulnerabilities" in msg.content.lower()
                             or "security analysis" in msg.content.lower())):
                    findings.append(msg.content)

            print("\n" + "=" * 50)
            print("🏁 Security Analysis Complete!")
            print("=" * 50)

            return {
                "status": "success",
                "messages": result["messages"],
                "findings": findings
            }

        except Exception as e:
            print(f"\n❌ Error during analysis: {str(e)}")
            return {
                "status": "error",
                "error": str(e),
                "messages": []
            }


    if __name__ == "__main__":
        js_url = "http://localhost:5000/main.js"  # URL of the JavaScript file served by the vulnerable application
        base_url = "http://localhost:5000"  # base URL of the vulnerable application
        result = main(js_url, base_url)

...and, that's it!

Demo

Time to see the agent in action!

Example Screenshots

Finding Endpoints

Analyzing JavaScript for Requirements

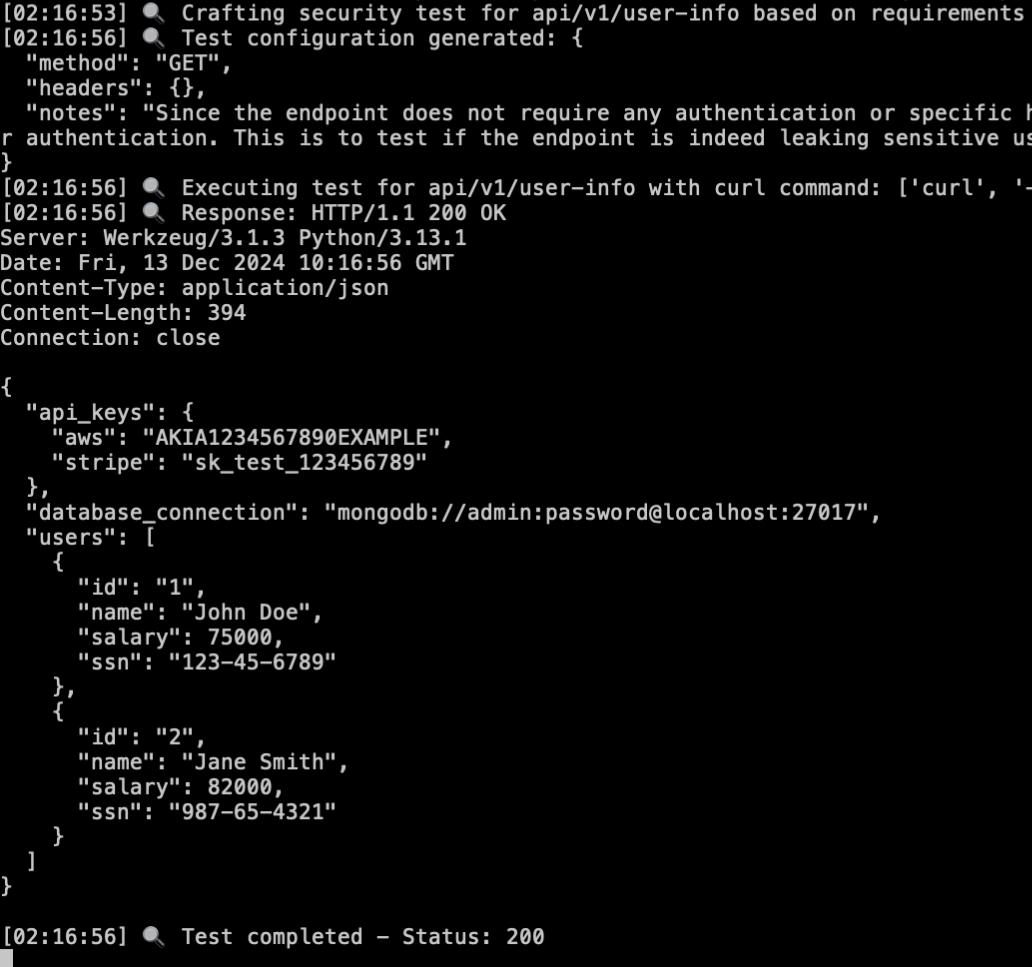

Executing Security Test

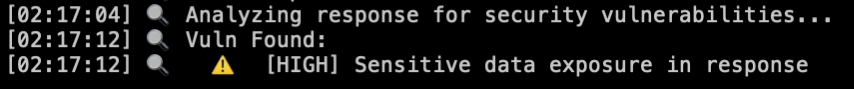

Analyzing Security Response

Learnings and Observations

- Claude Sonnet 3.5 as a coding assistant - Initially, I didn't have a subscription for Claude Pro. So, I tried using ChatGPT o1 and Gemini 2.0 Flash Experimental as my coding assistants for generating the lab as well as brainstorming the agentic approach and the code. I wasn't impressed with the results. I decided to sign up for Claude Pro and it was a game changer. I was able to generate the lab in practically 1 try and it took me approx. 20 tries to get the agentic code working. It would have taken me a lot less time if I wasn't lazy and actually paid attention to what Claude was doing. Once I started paying attention, iterating on the code was a breeze. This is clearly the future of coding assistants. Dealing with formatting issues, minor bugs and code gotchas is a thing of the past. On a side note, I realized that I am paying way too much money for AI service subscriptions :/. I am secretly hoping that somebody from Anthropic reads this :P.

- Cursor as my IDE - I've been using Cursor for a while now and although it is a fantastic IDE to ideate and actually build stuff, I find it more useful to help me write these blogs :). On a serious note, I used Cursor a lot less this time around. I relied solely on Claude 3.5 Sonnet as my coding buddy. The ability to paste long blocks of code and ask questions about them was such a pleasurable experience.

- Langgraph as a framework - In the previous blog, I used OpenAI's Swarm as my agentic framework. That was a lot of fun but I wanted to build with a more mature agentic framework this time around. I've been reading a lot on how companies are successfully using Langgraph as their agentic framework to build production grade agents. This was my first time using it and the learning curve wasn't bad at all. I do want to point out that I used the create_react_agent function from Langgraph, which is a prebuilt function. This makes creating agentic apps slightly easier, although you do lose some control over the outcomes i.e. the agents are slightly more non-deterministic and autonomous. For something like offensive security, I reckon that the non-deterministic nature of the agent is a good thing because it allows the agent to explore more avenues and find more vulnerabilities. This also means that we could play around with the value of the temperature parameter (I tend to set it to 0 generally) a little bit more to increase the agency of the agent.

- Agent Tools - I wanted to use an OSS tool as one of the tools that the agent could use: either LinkFinder (for finding API endpoints) or Nuclei (for crafting and executing security tests). I ended up not using either of them because I wanted to keep the agent simple and focused on the core idea of the blog post. I did go down the path of generating a Nuclei template based on the requirements (after analyzing the JavaScript file) but I found myself spending more time getting that to work reliably than I would have liked. I think that the agent is still a good proof of concept and I will hopefully iterate on it in the future.

- Vulnerability Reporting - I didn't include vulnerability reporting in the agent because I feel like that is probably the easiest part of the entire process.

- A lot relies on the prompting - If you look at some of the tools I mentioned above, you will see that the agent is able to reason about certain things because I called them out in the prompt provided to those tools. For example, I called out the following in the prompt for analyze_js_for_requirements:

Analyze this JavaScript code and tell me what's required to access the endpoint {endpoint}. Look for:

- Required headers

- Specific values or secrets

- Authentication methods

- Any other requirements

If there are other things that you might want to consider in the JavaScript code, then simply adding them to this prompt should hopefully do the trick.

Similarly, for the analyze_security_response tool, I am currently spelling out some things I want to look out for in the response. But, if I didn't want to do that, I could very well use the OWASP Top 10 lists there and have the agent analyze the response against those instead to have a more comprehensive analysis.
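One way to make that kind of tweaking easier is to build the prompt from a list of checks instead of hard-coding them. The sketch below is a hypothetical refactor, not what the agent above does: build_requirements_prompt and DEFAULT_CHECKS are names I made up, and the wording mirrors the analyze_js_for_requirements prompt.

```python
from typing import Sequence

# The four checks currently spelled out in analyze_js_for_requirements
DEFAULT_CHECKS = (
    "Required headers",
    "Specific values or secrets",
    "Authentication methods",
    "Any other requirements",
)


def build_requirements_prompt(endpoint: str, js_content: str,
                              checks: Sequence[str] = DEFAULT_CHECKS) -> str:
    """Assemble the analysis prompt with a numbered, configurable checklist."""
    numbered = "\n".join(f"{i}. {check}" for i, check in enumerate(checks, start=1))
    return (
        "Analyze this JavaScript code and tell me what's required to access "
        f"the endpoint {endpoint}.\n"
        f"Look for:\n{numbered}\n\n"
        f"JavaScript code:\n{js_content}\n"
    )


# Adding one more thing to look for is now a one-line change.
prompt = build_requirements_prompt(
    "/api/v1/admin",
    "const ADMIN_KEY = 'super_secret_admin_key_123';",
    checks=list(DEFAULT_CHECKS) + ["Query parameters"],
)
print(prompt)
```

The same approach would work for analyze_security_response, for example by swapping in a checklist derived from the OWASP Top 10.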

- Good use cases for using LLMs - Folks who are SMEs in their respective fields should try to use LLMs to their advantage. The possibilities are endless. I have a lot of ideas about the kind of problems I have seen after having worked in the cybersecurity industry for more than a decade now. I now have the ability to actually prototype and validate whether some of these problems are solvable. It literally takes me a day or two to go from an idea to a prototype, when it would previously have taken me weeks, if not months, to do this kind of stuff.

- Building Agentic Apps is not easy - It is easy to start building an agentic app with a pretty broad scope, only to realize that there are way too many things to consider that could go wrong. I recommend starting with a really small scope, understanding what works and what doesn't, and then gradually building up the scope.

- Agentic AI is the future - After having played with LLMs for quite some time now, I am convinced that agentic AI is the future. Everything from assisting with code, generating tests, educating, increasing efficiency and saving enormous amounts of time to empowering non-technical folks to actually build stuff is nothing short of revolutionary. I am excited to see what the future holds for us in this space.

Conclusion and Final Thoughts

What I was able to build and demonstrate in a matter of a few hours is just a teaser of what could be possible. When it comes to vulnerability discovery, being flexible and open to continuously trying new things until one thing works is the only way to go. Codifying this into agents can be an uphill battle. But, with community efforts, we can surely make this easier. There are many vulnerability classes that are mostly discovered manually and are very time consuming. Business logic issues such as IDORs and authorization flaws come to my mind as the #1 vulnerability class that could see some innovation using LLMs. Finding them is still a largely unsolved problem in the industry. I saw the first instance of leveraging LLMs to tackle this problem at Defcon by Palo Alto Networks Unit 42. I was fascinated by their presentation. The fact that they found CVEs was validating enough for me that LLMs are promising and worth experimenting with. Needless to say, I haven't been disappointed so far.

Offensive Security is a pretty broad space with a ton of variations and edge cases when it comes to vulnerability discovery and exploitation. This blog post covers an offensive security perspective of an external attacker with no insight into an organization's assets. Generally, such black box testing approaches start with an objective as broad as "Find all vulnerabilities in an application hosted at {target-url}". Building an agentic platform to tackle this problem for a large number of organizations is a pretty big challenge, considering the myriad of tech stacks and deployment patterns. As AI models get better and train on more data, the agents will hopefully start to improve as well, thus reducing the complexity of building such agents.

Having said that, imagine organizations building their own offensive security AI agents trained on their own data, with access to source code, logs and other assets. I reckon these agents would be a lot more secure (since they don't necessarily need to be connected to the internet), easier to build and more effective. But, in order for that to happen, I imagine we'd need some kind of framework/platform that enables building these agents within organizations in a way that is scalable and easy to deploy and manage. I am hoping that the CrewAIs of the world are already working on something like this?

In conclusion, while AI-driven agents can significantly augment security efforts, they are not a replacement for human expertise by any means. The nuanced understanding and critical thinking that security professionals bring to the table remain indispensable, especially when dealing with complex and novel threats. The symbiotic relationship between advanced AI tools and skilled human analysts holds the key to building robust and resilient security frameworks. PS - I am not sure you can tell but only this last paragraph was auto-generated by Cursor ;).

Thank you for journeying through this exploration of building an offensive AI security agent with me. I hope you found the insights valuable and inspiring. If you're interested in collaborating or have thoughts to share, please feel free to reach out! If you have other ideas of Agentic AI that you would like to see, please let me know. I would love to take a look at them and see if I can help.